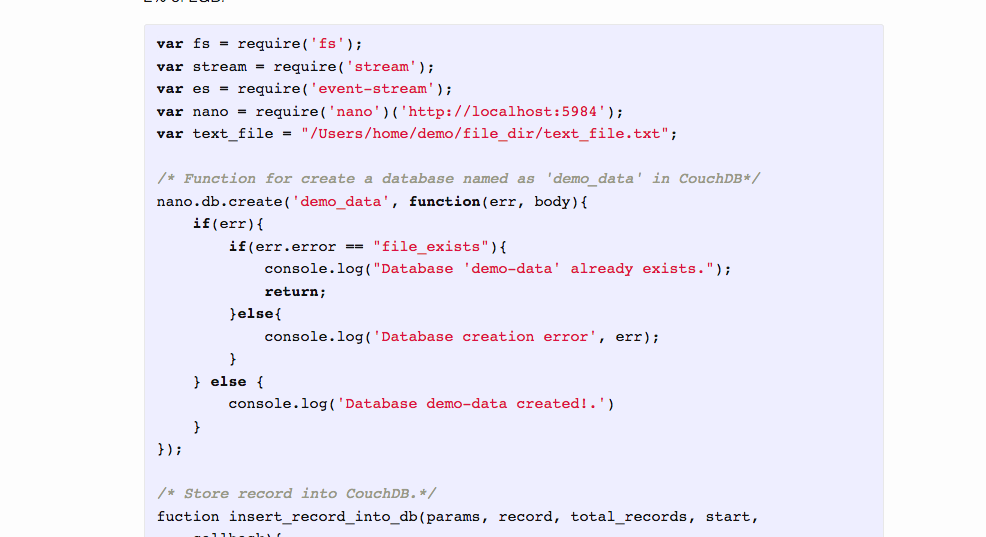

| var fs = require('fs');

var stream = require('stream');

var es = require('event-stream');

var nano = require('nano')('http://localhost:5984');

var text_file = "/Users/home/demo/file_dir/text_file.txt";

// Create the target database; an existing database is not treated as a failure.
nano.db.create('demo_data', function(err, body){
    if(err){
        if(err.error === "file_exists"){
            console.log("Database 'demo_data' already exists.");
        }else{
            console.log('Database creation error', err);
        }
    }else{
        console.log("Database 'demo_data' created.");
    }
});

// Handle to the target database (the original referenced an undefined `db2`).
var db = nano.use('demo_data');

// Insert one document, log progress, then call `callback` so the reader can continue.
function insert_record_into_db(params, record, total_records, start, callback){
    db.insert(params, function(err, body){
        var time = new Date().getTime() - start;
        if(err){
            console.log('Error: ' + params._id, err.message);
        }else{
            console.log('You have inserted record: ', params._id, 'record no: ', record,
                'from total records', total_records, 'Time elapsed: ' + time + ' ms.');
        }
        callback();
    });
}

// Stream the file one line at a time, pausing until each insert has finished.
function read_line_by_line(file, total_records){
    var record = 0;
    var start = new Date().getTime();
    var s = fs.createReadStream(file)
        .pipe(es.split())
        .pipe(es.mapSync(function(line){
            if(!line){
                return; // skip blank lines, including the trailing one
            }
            s.pause();
            var parms;
            try{
                // Keep only the JSON object on the line, then pick out the stored fields.
                var json = JSON.parse(line.slice(line.indexOf("{"), line.lastIndexOf("}") + 1));
                parms = {
                    _id: json["key"],
                    type: json["type"]["key"],
                    modified_at: json["last_modified"]["value"],
                    rev: json["revision"],
                    json: json
                };
            }catch(e){
                console.log('Skipping unparsable line:', e.message);
                s.resume();
                return;
            }
            record += 1;
            // Pass a function, so the stream resumes only after the insert completes.
            insert_record_into_db(parms, record, total_records, start, function(){
                s.resume();
            });
        })
        .on('error', function(err){
            console.log('Error while reading file.', err);
        })
        .on('end', function(){
            console.log("Finished reading the entire file.");
        }));
}

// First pass: count the non-empty lines so progress can be reported against a total.
var total_records = 0;

fs.createReadStream(text_file)
    .pipe(es.split())
    .pipe(es.mapSync(function(line){
        if(line){
            total_records += 1;
        }
    })
    .on('error', function(err){
        console.log('Error while reading file.', err);
    })
    .on('end', function(){
        // Second pass: insert the records from the same file that was counted.
        read_line_by_line(text_file, total_records);
    }));

|
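
The parsing step in the script can be tried on its own, without CouchDB or the input file. The following is a minimal sketch, assuming each line holds exactly one JSON object that may be preceded by other text. The helper name `line_to_doc` and the sample line are made up for illustration and are not part of the original script.

```javascript
// Hypothetical helper: turn one input line into the document the script inserts.
// Assumes one JSON object per line, possibly with other text before it.
function line_to_doc(line){
    var start = line.indexOf("{");
    var end = line.lastIndexOf("}");
    if(start === -1 || end < start){
        return null; // no JSON object on this line
    }
    var json = JSON.parse(line.slice(start, end + 1));
    return {
        _id: json["key"],
        type: json["type"]["key"],
        modified_at: json["last_modified"]["value"],
        rev: json["revision"],
        json: json
    };
}

// Made-up sample line, for illustration only.
var sample = 'prefix\t{"key": "/demo/1", "type": {"key": "/type/demo"}, ' +
    '"last_modified": {"value": "2020-01-01T00:00:00"}, "revision": 3}';
console.log(line_to_doc(sample)._id);
```

Keeping this step in a separate function makes it possible to check the field mapping against a few sample lines before running the full import.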